RAPTOR: Recursive Abstractive Processing for Tree-Organized Retrieval

Source: Paper / Official GitHub

This is a paper from Stanford, officially titled RAPTOR: Recursive Abstractive Processing for Tree-Organized Retrieval. When it first came out earlier this year, I saw many people sharing it on Twitter, and it was also accepted by ICLR, making it quite valuable to read.

I assume readers already have basic RAG knowledge, so I won’t elaborate on that here and will dive directly into the core content of the paper.

Concept

The Problem This Paper Aims to Solve: Deficiencies of Simple RAG

Despite a diversity in methods, the retrieving components of models predominantly rely on standard approaches, i.e., chunking corpora and encoding with BERT-based retrievers. Although this approach is widely adopted, Nair et al. (2023) highlights a potential shortcoming: contiguous segmentation might not capture the complete semantic depth of the text.

The general RAG creation process includes: chunking text → building embeddings → when a query comes in, finding relevant text as context. However, when chunking text, semantic information loss in context is inevitable, leading to inability to find relevant text or incomplete information.

Therefore, the problem this paper aims to solve is: How to preserve semantic-level information as much as possible while chunking text.

Why Do We Still Need RAG as LLMs Accept Longer Context?

Why Retrieval? … However, as Liu et al. (2023) and Sun et al. (2021) have noted, models tend to underutilize long-range context and see diminishing performance as context length increases, especially when pertinent information is embedded within a lengthy context.

One of the current LLM development trends is that the tokens that can be input to LLMs are getting longer. For example: GPT-4o (128k), Claude 3.5 Sonnet (200k).

At this point, you might think, if we can simply feed all context to the LLM at once, why bother with RAG? Just stuff everything into the LLM and be done with it!

Of course, in practice, if you just want to demo a baseline version, this approach is certainly fine. However, if you have quality requirements for model output, you must know that overly long context has the following drawbacks:

- Longer context is harder for models to understand, resulting in poorer output quality.

- Longer context often comes with irrelevant content, which negatively impacts model understanding.

- Longer context is expensive and slow.

Based on these three points, RAG is still very suitable for LLM products! (Won’t be eliminated anytime soon)

Solution

Core Concept (Idea)

As mentioned earlier, the biggest problem with current simple RAG systems is: when splitting text, key information is easily lost, causing semantic incompleteness between text chunks, leading to imprecise retrieval.

So how do we preserve the lost key information?

The solution proposed in this paper is very concise: Through continuous clustering and summarization, create a hierarchical structure to preserve semantically similar information as much as possible.

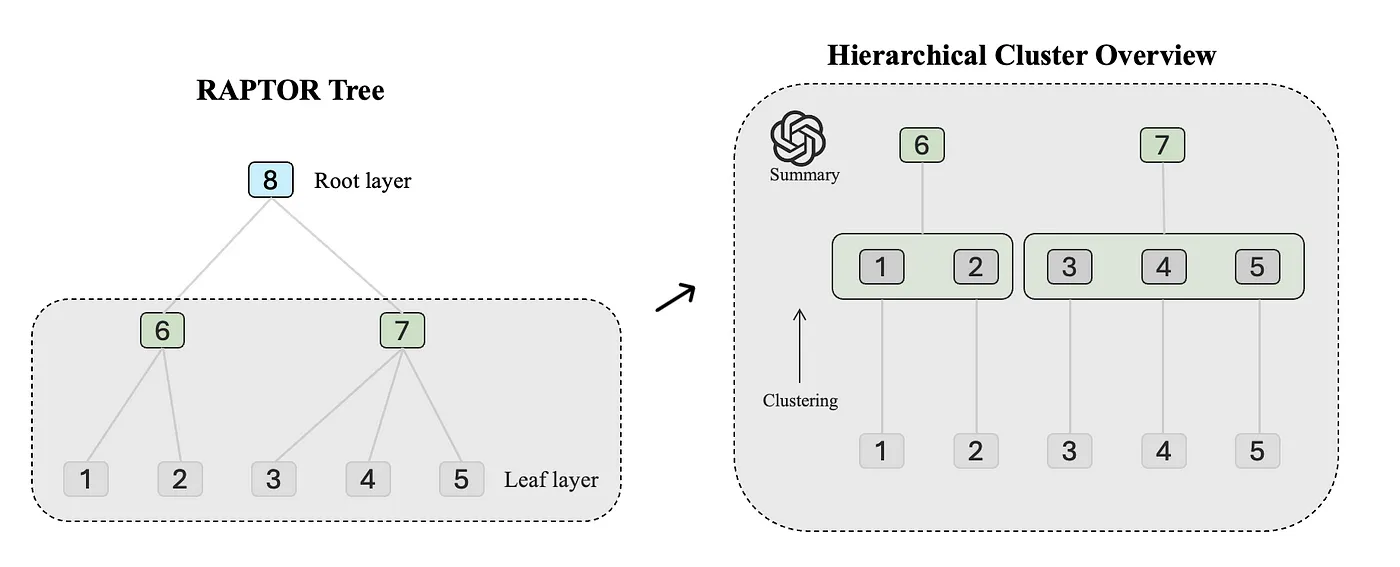

This paper’s method first clusters the leaf layer (the most original chunked text), then summarizes the text in each cluster separately, thereby generating new layer text (corresponding to 6, 7, 8 in the figure). This process is repeated continuously until reaching the preset layer limit, ultimately constructing a tree structure where each layer contains complete information from related text in the previous layer.

Simply put, the overall process is roughly as follows:

- First chunk the original text to get the first layer (leaf layer). Ex: one article → 5 chunks (1,2,3,4,5 in Figure 1)

- Cluster the text in that layer. Ex: 5 chunks → 3 clusters (gray areas in layer 2 of Figure 1)

- Summarize the text in each cluster to get the next layer’s text. Ex: 3 clusters → 3 new texts (3,5 in Figure 1 generate a summary to get new layer text 6)

- Repeat steps 2~3 until reaching Max layer. Ex: The maximum layer in Figure 1 appears to be 3 layers

Clustering

Clustering plays a key role in building the RAPTOR tree, organizing text segments into cohesive groups. This step groups related content together, which helps the subsequent retrieval process.

Clustering is the most important aspect of this RAPTOR paper. Essentially, the entire paper uses recursive clustering to generate a hierarchical text structure, allowing RAG systems to obtain richer context.

In implementation, to improve clustering effectiveness, the authors made some special treatments:

Soft Clustering

One of the unique aspects of our clustering approach is the use of soft clustering, where nodes can belong to multiple clusters without requiring a fixed number of clusters. This flexibility is essential because individual text segments often contain information relevant to various topics, thereby warranting their inclusion in multiple summaries.

In reality, a text certainly has more than one type. Therefore, the authors consider using soft clustering algorithms, which is Gaussian Mixture Model (GMM).

If you’re not familiar with GMM, that’s fine. GMM assumes that a text may come from multiple clusters, just with different probabilities of belonging to each cluster.

Dimension Reduction — UMAP

The high dimensionality of vector embeddings presents a challenge for traditional GMMs, as distance metrics may behave poorly when used to measure similarity in high-dimensional spaces (Aggarwal et al., 2001). To mitigate this, we employ Uniform Manifold Approximation and Projection (UMAP), a manifold learning technique for dimensionality reduction (McInnes et al., 2018).

The authors point out that GMM performs poorly in high-dimensional spaces because distance calculation accuracy decreases in high dimensions, and clustering essentially involves calculating distances. Therefore, the authors plan to reduce the dimensionality of embedded text, specifically using UMAP. (For example, text embedding originally at 768 dimensions, reduced to 20 dimensions using UMAP)

So in practice, how many dimensions should we reduce to?

Although the authors mention that poor clustering in high dimensions comes from related paper results, that paper doesn’t specify exactly how many dimensions should be used, but all their experiments were done at dimension 20, which can be used as a reference.

Global & Local Clustering

…it first identifies global clusters and then performs local clustering within these global clusters. This two-step clustering process captures a broad spectrum of relationships among the text data, from broad themes to specific details.

The authors designed a two-stage clustering method, first performing global clustering on one layer, then clustering again within each cluster separately. This not only captures high-level clusters but also takes care of detailed items within each cluster.

Simply put, two-stage clustering can capture more relationships between texts. Just understand the purpose of two-stage clustering here; for details, you can check the implementation.

Bayesian Information Criterion (BIC)

The most common problem in clustering is how many clusters to set?

The authors directly use Bayesian Information Criterion to calculate information scores to decide how many clusters to create. The calculation formula is:

\[BIC = -2 \ln(L) + k \ln(N)\]Where N represents the number of text segments, k represents the number of model parameters, and L is the maximum likelihood function value of the model.

Retrieval

The previous parts were about how to build the knowledge base; now let’s talk about how to retrieve.

There are two methods, each with advantages:

Tree Traversal (Tree Traversal)

Tree traversal. Simply put, given root text, find all its children as relevant text for retrieval.

Advantages: Uniform information granularity, obtained text includes broad and detailed text, rich and diverse information.

Disadvantages: Complex retrieval, more places need adjustment (like how many to take from each layer? How deep to go?)

In implementation, assume taking the most relevant text from each layer.

Collapsed Tree (Collapsed Tree)

Collapsed tree. This is simpler, directly flattening the tree and picking the most relevant text when all text is at the same level.

Advantages: Simple retrieval, suitable for answering specific questions (appropriate granularity)

Disadvantages: Uneven retrieval granularity, need to retrieve from every text, higher computational cost.

Which Retrieval Method Works Better in Practice?

The authors believe collapsed tree performs better on average than tree traversal. Because collapsed tree considers information relevance of all texts, the obtained information granularity is suitable for answering specific questions. In contrast, tree traversal, regardless of how you set the retrieval number for each layer, the proportion of coarse and fine text obtained remains unchanged.

Summary

- What problem does this paper solve? Text segmentation → semantic incompleteness → inaccurate retrieval

- Solution: Recursive clustering → generate multi-level text, preserve complete semantics

References

Enjoy Reading This Article?

Here are some more articles you might like to read next: